In this page

Csv Analysis feature of GreyCat

CsvAnalysis feature of GreyCat is very fast and powerful type deduction feature, which allows the user to explore millions of Csv file lines just in a matter of seconds.

It allows you to explore:

8,000,000 lines of Csv (1.3 GB, 15 columns) in 4.8s,

Average speed of: 1.7M rows/second (277 MB/s.) with unlimited date check limits.

Dataset

We are using the following dataset to demonstrate the feature of csv analysis:

Patrick Brownsey (November 11, 2023) Natural history specimens collected and/or identified and deposited. https://doi.org/10.5281/zenodo.10110345

mkdir -p data

curl -L https://zenodo.org/records/10110346/files/Q18983320.csv?download=1 > data/dataset.csv

Analysis

To start using CsvAnalysis you can simply call analyze.

Optionally, you can specify a configuration, by creating a CsvAnalysisConfig object, and pass it as a parameter.

The configuration can set parameters for the Csv file, and for the amount of analysis to perform (number of rows, number of checks, etc).

All configuration items are optional, and some have default values (example: row_limit defaults to all rows).

For this type of data we know that there is 1 header line, so we can do this:

use io;

use util;

fn main() {

var file_path = "data/dataset.csv";

var config = CsvAnalysisConfig { header_lines: 1, row_limit: 1000};

var stats = CsvAnalysis::analyze(file_path, config);

// Logging

println(stats);

}

Or rely on the automatic header lines detection:

use io;

use util;

fn main() {

var file_path = "data/dataset.csv";

var config = CsvAnalysisConfig {row_limit: 1000};

var stats = CsvAnalysis::analyze(file_path, config);

// Logging

println(stats);

}

After running analyze() your CsvStatistics instance will contain all the analysis per each column of your Csv file.

type CsvStatistics {

header_lines: int?;

separator: char?;

string_delimiter: char?;

decimal_separator: char?;

thousands_separator: char?;

/// statistics per column

columns: Array<CsvColumnStatistics>;

/// accumulated analyzed (failed + parsed) rows for all accumulated Csv files

line_count: int;

/// accumulated number of failed lines for Csv files

fail_count: int;

/// number of Csv files explored

file_count: int;

}

Alternatively, you can use the update(file_path) method of CsvAnalysis.

You first need to create a CsvAnalysis (empty constructor, or with a configuration as above).

Each call of this method for a file will accumulate the statistics into the object.

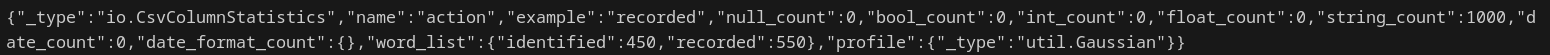

Strings

CsvAnalysis be default tries to infer the value of each cell in the provided Csv file. One of the examples is string type. Let’s print the first column of your statics.

println(stats.columns[0]);

It identifies that your first columns contains 1000 strings. We set the number of rows to analyze to 1000 lines.

By default (no value), all rows will be used.

Enums

In addition, for your first column you can notice that enumerable_count is {"identified":450,"recorded":550}. This shows that in this column, you have repetitive set of keywords such as “identified” and “recorded”. enumerable_limit_default is the property of CsvAnalysis, which sets the maximum number of keys that your enumerable_count property might contain. You can change it while creating your CsvAnalysis instance. (As well as, setting it to -1 in case you want to have every string value in your columns to be considered as Enums)

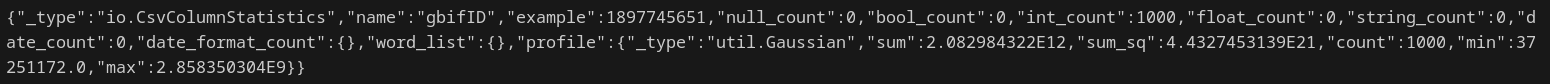

Floats and Integers

Integers and floats are inferred during analysis and Gaussian profile is computed for your columns which contain integers or floats.

// You can print the 1th column of your statistics

println(stats.columns[1]);

As you can see, it calculates the sum, squared sum, min and max values of your inferred numbers.

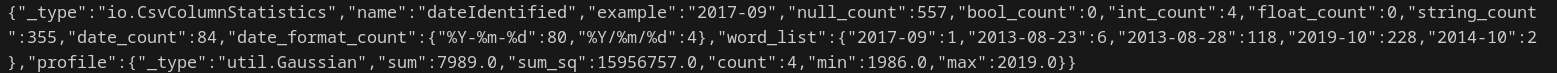

Dates

// You can print the 6th column of your statistics to explore the dates

println(stats.columns[6]);

It has identified 557 null values (Values such which are empty ",," in Csv), 84 date_count values and 300+ enumerable_count enum values.

We could deduct that this column contains dates. It identified only 84 date values, due to default threshold of 100 for date_check_limit property of CsvAnalysis. We can change it and re-run our analyze() function.

Let’s change this threshold and also add a few new date formats on top of our default formats that already exist in the CsvAnalysis feature.

config = CsvAnalysisConfig {

date_check_limit: -1,

date_formats: [

"%Y-%m",

"%Y-%m-%d",

"%Y-%m-%d %H:%M:%S %Z",

],

};

stats = CsvAnalysis::analyze(file_path, config);

// Logging

println(stats.columns[6]);

Now you can see that, almost all the dates are inferred.

Bool

Finally, statistics of your columns will infer how many boolean values you have and set this value to bool_count property.

Generating suitable Csv format instructions (CSvFormat)

Typically, after exploring a Csv file, you proceed to importing the Csv contents.

The import (see CsvReader::new) requires a CsvFormat object, which guides the import into GCL types.

For convenience, the infer method does precisely this:

type CsvFormat {

// ... other methods omitted

@expose

static native fn infer(analysis: CsvStatistics): CsvFormat;

}

After a Csv file has been analyzed, you can call this method to obtain a complete, matching, CsvFormat object. Of course, the proposed format may not be exactly what you need, you can still edit it.

The format can be used with the CsvReader type.

Note that the CsvFormat inferred includes the correct date format, if the column is a date of a known format.

The Csv analysis type allows you to add specific date formats to include when exploring a Csv file.

As an example application of the CsvFormat, you can use CsvReader’s validate() method:

var a = CsvAnalysis::analyze("some_file.csv", null);

var suggested = CsvFormat::infer(a);

// use the format to read the Csv:

var rd = CsvReader::new("some_file.csv", suggested);

// or validate a file with the format:

var valid = CsvFormat::validate("some_file.csv", suggested, null, 10, "errors.csv"); // validate all rows, but log max 10 errors

Despite the exploration, Csv import errors can still occur if the number of columns do not match.

The @expose attribute indicates that this function is exposed to the web interface.

Generating a type for lines of a Csv

The CsvReader type offers a read_to(target) method, which fills in the attributes of the target with each column of a Csv line.

This implies that a suitable target object is created, and passed to the read_to() method.

CsvFormat offers a method to generate a GCL type, to hold the content of a line:

@expose

static native fn generate(format: CsvFormat, ident_col: int?, time_col: int?): String;

The output is a String which contains the GCL code to paste into the part of your program that loads the lines of a Csv.

Csv exploration example

Here is an example of the use of the types and methods above.

use io;

fn explore() {

var cfg = CsvAnalysisConfig {

enumerable_limit: 10, // how many unique strings to report, default is 100

};

var dir = FileWalker::new("data");

var analysis = CsvAnalysis {config: cfg};

if(dir != null){

while(!dir.isEmpty()){

var f = dir.next();

if(f != null){

println("analyzing: ${f.path}");

analysis.update(f.path);

}

}

}

var stat = analysis.statistics;

if(stat != null){

println(stat);

}

// generate the appropriate CsvFormat, for CsvReader:

csv_format = CsvFormat::infer(stat);

println(csv_format);

// optional, verify the inferred format with the validate method

dir = FileWalker::new("data");

while (!dir.isEmpty()){

var f = dir.next();

if (f != null) {

var val = CsvFormat::validate(f.path, csv_format, null, null, null); // null set default values, no rejected file

println("${f.path}: ${val}");

}

}

// finally, generate source code for a type definition, to use with CsvReader::read_to()

// to copy into the GCL program which loads the Csv lines:

var gcl_type = CsvFormat::generate(csv_format, null, null);

println(gcl_type);

}